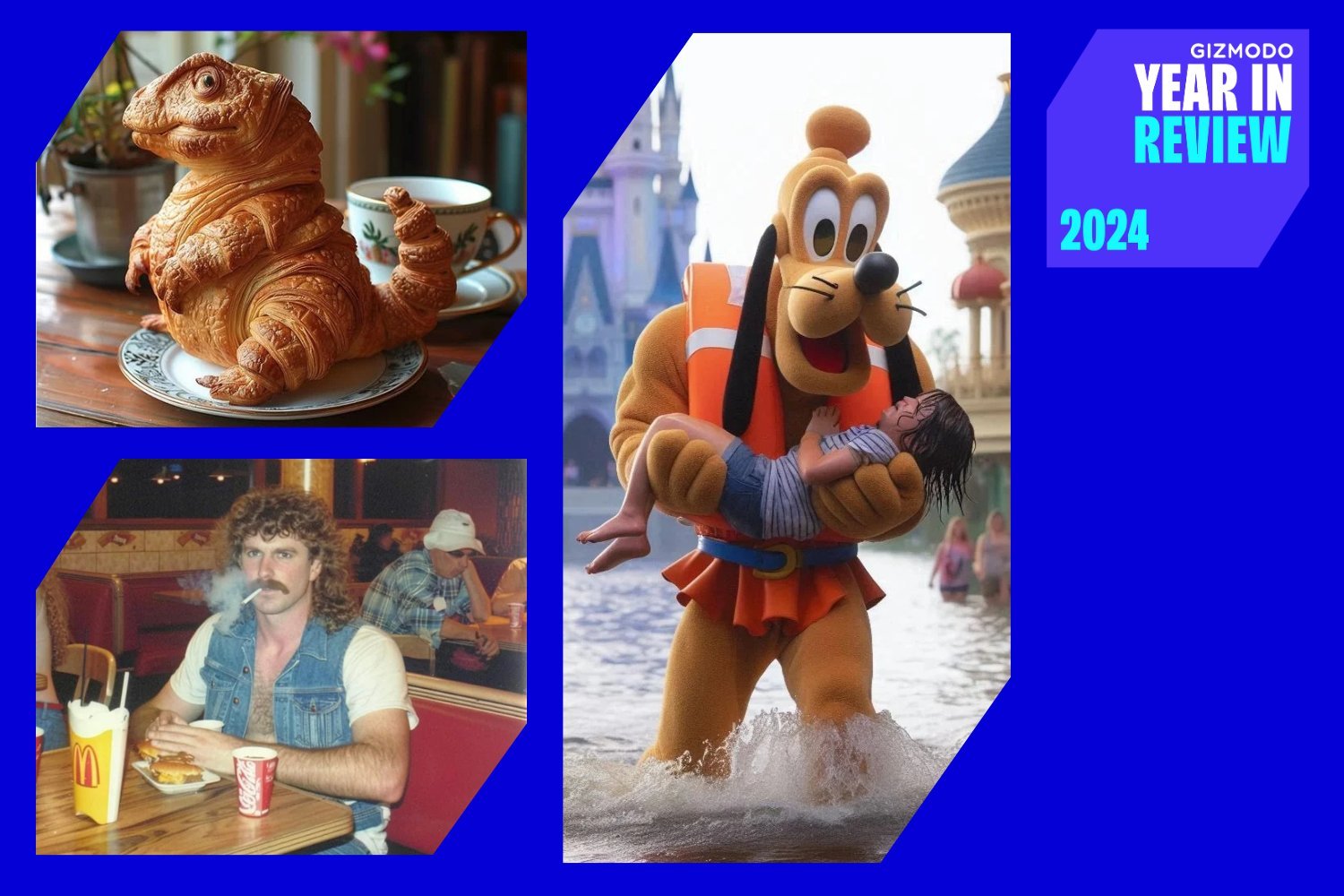

From smoking at McDonald’s to AI Tom Cruise, 2024 was a busy year for viral hoaxes.

The web is completely flooded with fake images, whether they’re fake photos, fake videos or fake animated GIFs. There are so many fakes spreading online these days that it can be hard to keep track. We’ve got a round-up of the fakes that went viral this year and it hopefully serves as a helpful reminder that you shouldn’t always believe your own lying eyes.

Here at Gizmodo, we’ve been fact-checking fake images that go viral for well over a decade now. And while most of those images of the past were altered with clunky Photoshop-like tools, generative AI is now fueling viral hoaxes.

But that doesn’t mean everything in 2024 was AI-generated. In fact, the images that really tricked people in 2024 were often more traditional Photoshop-style fakes. AI is abundant, but people have also developed an eye for when AI has been used. There’s an eerie vibe emanating from AI-generated images. And old-school Photoshop can still create images that don’t raise the same red flags.

1) No Robo-Dogs Were Harmed

Have you seen that photo of a dog trying to make sweet and passionate love to a robot dog? It went viral in 2024 on sites like Reddit, Instagram, and X. But it’s fake. Hopelessly, tragically fake.

The original photo was first shared by the New York Fire Department on Facebook way back on April 18, 2023. And, as you can see from the side-by-side we’ve created below, there’s no white dog going to town on the fire department’s robot dog.

It’s not entirely clear who first made the photoshopped image, but it shows up on the subreddit Firefighting on April 19, 2023, just a day after the original was posted to Facebook. Other accounts on a variety of platforms also posted the manipulated photo on April 19, 2023, including this account on Instagram, making it difficult to narrow down who actually created the joke.

The original photo gained attention because the New York Fire Department has a robotics unit, founded in 2022, that can be deployed for search and rescue operations. The robot was being used that day during a partial building collapse of a parking garage in Lower Manhattan.

“The robot dog or the drones, they’re able to stream the video directly to our phones, directly to our command center,” New York’s chief of fire operations John Esposito told reporters shortly after it happened last year in a video aired by CBS New York.

“This is the first time that we’ve been able to fly inside in a collapse to do this and try to get us some information, again, without risking the lives of our firefighters,” Esposito continued.

While it’s great to see life-saving technology being used in such cool ways, none of this answers the question we’re struck with after seeing an admittedly photoshopped image of a dog trying to mount a robot dog: Would this ever happen in real life? Dogs will famously try to hump just about anything, but there haven’t been any documented cases that we’re aware of. But that may simply be explained by the fact that these four-legged robots aren’t very common yet.

Have you ever seen a dog trying to get up close and personal with a robot dog? Drop us a line or leave your story in the comments. We’re probably not going to believe you without photographic evidence. And given the ubiquity of artificial intelligence tools that make such images easier to create than ever, we still probably won’t believe you.

Oh, the perils of living in the future. Photographic evidence isn’t worth very much these days. But a funny altered image is still a funny altered image, no matter what era you live in.

2) All Crime is Legal Now

Did you see photos on social media in 2024 of a street sign that reads, “Notice, Stolen goods must remain under $950”? The photos were taken in San Francisco and appear to be referring to a right-wing disinformation campaign that claims theft has been legalized in California. But the signs weren’t put there by any government entity. They’re a prank.

When the photos first surfaced on X earlier this year, many people speculated the images may have been photoshopped or created with AI technology in some way. One of the photos even received a Community Note submitted by users that claimed as much. But that’s not true.

They’re photos of a “real” sign in the sense that they weren’t created using programs like ChatGPT or Photoshop. The sign was captured from multiple angles, as you can see above, helping prove that this sign was actually placed there in front of the Louis Vuitton store.

And while they look professionally done, the signs had subtle clues indicating they weren’t real (including screws that look different from those used by the city), which proves they were installed by anonymous pranksters. The San Francisco Department of Public Works and the Office of the City Administrator confirmed to Gizmodo by email that the sign “was not City sanctioned and not posted by the City.”

What’s the idea behind the sign? It’s most likely a reference to the fact that the state of California raised the threshold for when shoplifting goes from a misdemeanor to a felony back in 2014. The threshold in California is $950, which some people think is too high. Fox News has done several segments on the topic, claiming that California has “legalized” shoplifting, which is complete nonsense. The idea is fueled by cellphone videos aired by conservative media that give viewers the impression that theft is constant in the state.

The problem, of course, is that many other states, including those with Republican governors and legislatures, have much higher thresholds for when shoplifting becomes a felony. In fact, as former Washington Post criminal justice reporter Radley Balko wrote in 2023, a whopping 34 states have a higher threshold than California. That includes Republican-run states like Texas ($2,500) and South Carolina ($2,000). Needless to say, nobody is claiming that Texas and South Carolina have legalized theft.

Professional-looking signs clearly made by pranksters have been popping up in San Francisco for years. There was the sign near OpenAI’s headquarters that warned all activities were being monitored by security cameras and used for training AI, others declaring a “no-tech zone” back in 2015 aimed at tourists, and the one that read “we regret this bike lane” in 2023.

Given the number of fake official-looking signs that have sprung up in San Francisco over the past decade, it seems unlikely we’ll ever learn who was behind the “stolen goods” sign that’s been going viral. But we know that’s not a real sign put out by the city. And despite what you might see on Fox News or X, retail stores in California aren’t really a lawless Mad Max hellscape. You have to drive the freeways in L.A. to experience that.

3) The Lines on Maps Dividing States Aren’t Real Either

Did you see videos in 2024 claiming to show a point where the Pacific Ocean meets the North Sea, suggesting the two don’t mix? It’s a nonsense claim for so many reasons, but that didn’t stop one video from racking up over 20 million views.

“Nobody can explain why oceans meet and never mix,” wrote one X user in a tweet featuring the viral video.

“The beauty of the oceans,” another X user wrote in a tweet that was seen over 12 million times.

Both of the accounts, it should be noted, have blue checkmarks which can be purchased for $8 per month. Before Elon Musk bought the platform, the verification system was intended to combat impersonators, but it now gives anyone who can rub two brain cells together the ability to get boosted by the X algorithm.

Why is this video so dumb? If you pull up a map of the North Sea, you can observe for yourself that it’s surrounded by England, Norway, Denmark, and the Netherlands. The closest major ocean is the Atlantic and it’s nowhere near the Pacific. Simply put, the North Sea and the Pacific Ocean never meet. But that’s just one reason it’s so painful to see this video getting traction on a major social media site.

For whatever reason, the past few years have produced a lot of videos of people claiming to show where oceans meet but don’t mix. Typically, these viral videos show places where saltwater and freshwater collide, making it look like there’s a line separating the two. These videos can be particularly deceiving when a large river meets the ocean. Reasonable people can think they’re viewing something shot on the open ocean, not realizing the very simple explanation for what they’re seeing.

Ask any oceanographer, as USA Today did in a debunker from 2022, and they’ll tell you that the oceans do “mix,” despite frequent posts on social media that there’s some kind of reason for them not mixing. One common claim on platforms like X, TikTok, and YouTube is that different iron and clay content prevents the oceans from mixing, an idea that simply isn’t true.

But the idea that you can draw a line precisely showing where major oceans begin and end is tremendously popular. And that idea seems particularly common among people who want to insist science doesn’t understand why “oceans don’t mix.”

“This is the Gulf of Alaska where 2 oceans meet but don’t mix. Tell me there is No God and I’ll ask you ‘Who commanded the mighty waves and told them they could go no further than this’! What an absolutely AMAZING God…..” one viral post from Facebook claimed.

Well, actually we do understand. Because the oceans do mix. Even when incredibly dense people on social media tell you otherwise.

4) Mike Would Never

A video appearing to show MyPillow CEO Mike Lindell driving while not paying attention to the road went viral on X in 2024. And one user even claimed Lindell was “hammered” while driving. But the video is significantly altered.

The video was seen millions of times on X alone, with many people clearly understanding it’s an edited video. But the video, which is originally from 2023, started to be shared without context. And some people thought it was real.

My dude is HAMMERED pic.twitter.com/6PfQ77Ii3b

— x_X_I Love the Universe_X_x (@Universe__Lover) February 23, 2024

X’s program of crowdsourced fact-checking, Community Notes, eventually annotated the fake video. The original video, while not entirely complimentary to Lindell, clearly shows the MyPillow CEO talking directly to the camera while his car isn’t moving.

“Strung-out looking Mike Lindell says he really needs people to buy some of his new slippers after he was canceled by retailers and shopping channels,” liberal influencer Ron Filipkowski wrote on X back in March 2023.

Strung-out looking Mike Lindell says he really needs people to buy some of his new slippers after he was canceled by retailers and shopping channels. pic.twitter.com/MM0pspaPti

— Ron Filipkowski (@RonFilipkowski) March 27, 2023

The video appears to have been originally edited by comedy writer Jesse McLaren, who added a moving background and engine noises to make it look like Lindell is driving. McLaren’s video was retweeted on top of the original, making it clear his intention wasn’t to deceive anyone but instead to just make a joke.

“I made it look like the car’s moving and it’s 100x better,” McLaren tweeted last year.

But the video started making the rounds again, without making it clear the video had been manipulated. That happens frequently, as it recently did when a joke about Gmail being shut down escaped the circle of original posters who knew it was a joke.

“My dude is HAMMERED,” an X account called Universe Lover wrote on February 23, 2024.

It’s not clear what software McLaren used to edit this video, but with AI video generation tools getting better with each passing month, it won’t be long until everyone has the ability to create almost anything (at least in short form) they can imagine with just a few word prompts. The viral internet is only going to get more confusing with AI advancements just over the horizon.

5) The McDonald’s Goof

Have you seen a photo on social media recently that appears to show a man in 1980s-style clothes smoking a cigarette in McDonald’s? The image went viral, racking up tens of millions of views in 2024. But it’s completely fake. The image was made using generative AI.

The image, which features a man with long curly brown hair and a mustache, instantly grabbed attention when it was first posted to X earlier this year. The man looks like he’s smoking a cigarette while emitting a puff of smoke, something that was allowed in some McDonald’s locations of the 20th century before clean indoor air laws became the norm.

But if you take a closer look at the image, there are some telltale signs that this “photo” was created using AI. For starters, just take a look at the fingers and hands. Do you notice how unusually long the man’s left hand is, without any discernible wrist? It looks like his arm just morphs into fingers that are incredibly long.

Next, just take a look at the writing in the image. The red cup on the table, which appears to be an attempt by the AI to mimic Coke’s branding, is a swirl of nonsense. And while McDonald’s signature golden arches look accurate on the french fry box, the packaging for McDonald’s fries has typically been predominantly red, not yellow. It also looks like a straw is sticking out of the container. I’ve never tried to eat fries through a straw, but one imagines that’s a difficult task.

The man behind the main subject of the “photo” looks even more warped, with both odd-looking hands and a face to match. The background man’s hat also looks like an attempt at both a shirt model and a bucket hat from the 2000s, which puts the main subject in an even more perplexing situation.

And what’s the writing in the upper-right corner supposed to say? We only have partial letters, but it appears to say Modlidani in the shape of what’s approximating a McDonald’s sign.

Last but not least, check out the main subject’s shirt situation. The man appears to be bare-chested while wearing a denim vest but the sleeves look like a white t-shirt. It doesn’t make much sense.

Clearly the reason this image went viral is that it speaks to some version of the past that doesn’t exist anymore, whether it’s a guy with that haircut or just smoking in general. Smoking tobacco in public places was the norm before it was phased out in a decades-long process across the U.S. in an effort to protect public health. Many states first created “smoking” and “non-smoking” sections of restaurants in the late 20th century before smoking was banished altogether in most indoor spaces by the early 21st century. There are still a handful of states that allow indoor smoking of cigarettes in some venues but they’re becoming rarer with each passing year.

If you saw this image in your feeds and didn’t immediately register it as AI, you’re not alone. We often debunk images and didn’t even give it a second thought when we first saw it. But that perhaps speaks to how a low-stakes image doesn’t get quite as much scrutiny when it’s going viral on social media platforms.

Frank J. Fleming, a former writer for conservative news satire site the Babylon Bee, pointed out on X how many people didn’t have their guard up when sharing the fake smoking image.

“This is such an interesting case of people being fooled by an AI image because the stakes are so low. There are so many obvious signs this is AI, but most would miss them because they’re not a part of the focus of the image and since this isn’t a case where you think someone would be tricking you, you have no reason to analyze it that closely,” Fleming wrote on X.

We’re reminded of the viral image of Pope Francis wearing a big white puffer coat in 2023, another instance where people were quick to believe it might be real simply because it didn’t register as something that mattered all that much, yet was still amusing to see. Who cares if the Pope wears a cool jacket? Well, plenty of people if it’s just an example of material excess or fashion-consciousness from a figure who’s supposed to be above earthly concerns.

6) Yummy But Fake

Did you see that croissant made in the shape of a dinosaur that went viral on sites like Reddit and X? It’s incredibly cute. But we regret to inform you this “Croissantosaurus” was made using generative AI. In fact, if you track down where this pastry was first posted, you’ll find an entire fake restaurant that never existed.

“Babe, what’s wrong? You haven’t eaten your Croissantosaurus…” a viral tweet joked on X. The tweet racked up millions of views. But a reverse-image search of the admittedly cool-looking pastry will bring you to this Instagram page for a restaurant in Austin, Texas called Ethos Cafe. And if you notice something weird about the restaurant, you’re not alone.

“Unleash your inner paleontologist and savor our new Dino Croissants. Choose your favorite dinosaur and pair it with a delightful cappuccino. A prehistoric treat for a modern indulgence!” the Instagram post reads.

Strangely, Ethos doesn’t actually exist and appears to be some kind of hoax or art project by anonymous creators. Austin Monthly reported on the fake restaurant last year and a subreddit for Austin Food picked apart many of the weird things about this new restaurant when it first surfaced online.

For starters, all the staff at the restaurant appear to be AI-generated. Do you see anything strange about this bartender, aside from the fact that he’s named Tommy Kollins? That’s right, he appears to be gripping that drink with a hand that has six fingers.

AI image generators like DALL-E, Midjourney, and Stable Diffusion often have trouble generating hands accurately. And while many improvements have been made since they were first released, hands can still be tricky. The website also has odd instructions for obtaining a reservation, which appears to be a commentary on the lengths some people will go to in order to eat at elite dining establishments.

“Reservations go live at 4:30 am every first Monday of every month. By using multiple devices simultaneously, you can access our system more easily, reduce competition from other users, respond faster, and have a backup option in case of any technical issues or internet connection problems. This approach maximizes your opportunity to secure your desired reservation promptly and efficiently,” the website reads.

Gizmodo tried to contact the Ethos Cafe through the form on its website but didn’t hear back. Just know that the Croissantosaurus is adorable but entirely fake.

7) Land Before Time Fakes

Movie posters appearing to show an upcoming remake of the children’s dinosaur movie The Land Before Time (1988) elicited strong emotions on social media in 2024. But no matter if you think a remake is a good idea, the movie isn’t happening. At least not in the foreseeable future.

The rumors about this fake dino remake can seemingly be traced to a Facebook page called YODA BBY ABY, which first wrote about the potential movie in late 2023.

“Get ready to embark on a prehistoric escapade like never before! Disney and Pixar join forces to bring you a dazzling remake of The Land Before Time, where Littlefoot and friends journey through lush landscapes and encounter enchanting surprises,” the fake post reads. “Brace yourself for a January 2025 release – a dino-mite adventure awaits!”

But there’s no evidence that any remake of The Land Before Time is in production by Disney and Pixar, much less coming out in January 2025. Another viral claim suggested the movie is coming out in December 2024, but there’s no evidence for that either.

The prospect of a remake has been incredibly polarizing, especially because people who loved the original movie took issue with the way the dinosaurs looked on these fake movie posters.

“I hope this is some kind of sick joke that someone made, because that’s not Little Foot,” one TikTok user commented last week.

Other TikTok users said they were “disrespecting the spirit of Land Before Time” and “disrespecting Littlefoot” with the new character designs.

While the original 1988 film, executive produced by George Lucas and Steven Spielberg, is the most beloved, there were actually 13 sequels. Only the 1988 version received a theatrical release though, with all the follow-ups going straight to home video. The last in the series was released in 2016 and is titled Journey of the Brave.

But if I’m Universal Pictures I’m looking at the strong opinions currently circulating online and seeing dollar signs in my eyes. If people have strong feelings about the film series, that certainly counts for something. Millennial nostalgia can be an extremely profitable business as the generation enters middle age, whether it’s the 30th iteration of Mean Girls or our favorite animated dinosaurs. Get to work, movie execs.

8) Hate-monger Fakes

A tweet that appeared to show far-right influencer Candace Owens ridiculing Ben Shapiro with a reference to a “dry” bank account went viral in 2024. The tweet refers to “Ben’s wife” and even looks like it had been deleted, based on a viral screenshot. But the tweet isn’t real. It was made by a comedian.

“After getting fired today, my bank account is gonna be dry for a while, but not as dry as Ben’s wife,” the fake tweet from Owens reads.

Photoshopped tweets about Shapiro and “dryness,” let’s say, have been common on social media platforms like X ever since the conservative commentator awkwardly read lyrics to Cardi B’s hit song “WAP” back in 2020.

Owens joined the Daily Wire, a conservative media network co-founded by Shapiro, in 2021 to host a weekly show. But Owens departed the network, according to a social media post by Daily Wire CEO Jeremy Boreing. It’s still not clear whether Owens quit or was fired, but it’s easy to guess why Owens and the Daily Wire parted ways.

Owens had clashed with Shapiro, who’s Jewish, in recent months as she’s peddled antisemitic conspiracy theories, claiming on her show that Jewish “gangs” do “horrific things” in Hollywood. Owens recently liked a tweet about Jews being “drunk on Christian blood,” which appears to have been the last straw for the network.

The fake tweet that’s been made to look like it’s from Owens seems to have tricked quite a few people, including one X user who wrote, “The singular one and only time I’ll give her props that post is gold.”

Another user commented, “Why do people always post their best bangers and then fucking delete them after everyone has already seen it a thousand times.” The answer, of course, is that they didn’t actually tweet them in the first place.

The tweet has also made it to at least one other social media platform, the X rival Bluesky, where it was getting passed around as real.

The tweet was actually created by an X user who goes by the name Trap Queen Enthusiast. And if that name sounds familiar, it’s probably because they often go viral using fake screenshots that are made to look like they’re deleted tweets. In fact, Gizmodo previously debunked a popular tweet from Trap Queen Enthusiast that was made to look like it had come from musician Grimes, the former partner of billionaire Elon Musk.

The fake tweet from Owens racked up over 900,000 views in a short period of time. But Community Notes, the crowdsourced fact-checking program at X, took a very long time to note it was fake.

Part of the genius of these fake tweets is that they’re incredibly difficult to fact-check for anyone except the people involved. Passing around a screenshot with that little text “This post has been deleted” at the bottom makes it almost impossible for the average person to verify whether it ever existed.

9) AI Tom Cruise Sounds Off

Did you see clips from a new documentary narrated by Tom Cruise called Olympics Has Fallen, a play on the title of the 2013 movie Olympus Has Fallen? The new film claimed to document corruption at the Olympic Games in Paris, France. But it’s fake. Cruise’s narration was created with artificial intelligence and the “documentary” is actually the work of disinformation agents tied to the Russian government, according to a report from researchers at Microsoft.

The fake documentary is tied to two influence groups in Russia, dubbed Storm-1679 and Storm-1099 by Microsoft, and it’s easy to see how some people were duped. The film comes in four 9-minute episodes, each starting with Netflix’s signature “ta-dum” sound effect and red-N animation.

“In this series, you’ll discover the inner workings of the global sports industry,” the fake Tom Cruise says as dramatic music plays in the background. “In particular, I’ll shed some light on the venal executives of the International Olympic Committee, IOC, who are slowly and painfully destroying the Olympic sports that have existed for hundreds of years.”

But there are plenty of signs that this movie is bullshit to anyone paying attention. For starters, Cruise’s voice is realistic but sometimes has a stilted delivery. The big giveaway, however, might be words used by the Russian campaign that wouldn’t be used by Americans. For example, the first episode includes a line from the fake Cruise narration where he talks about a “hockey match” rather than a hockey game. The word “match” is much more common in Russia for sports like soccer and any real fan of ice hockey in the U.S. would call it a game, not a match.

There are also times when the fake Cruise narration sounds like it’s reading strategy notes made by the people who concocted this piece of disinformation. Much of the documentary spends time trying to tear down the organizers of the Olympics as hopelessly corrupt, and AI-generated Cruise tries to tie it to one of the actor’s most famous roles in the 1990s.

“In Jerry Maguire, my character writes a 25-page-long firm mission statement about dishonesty in the sports management business. Jerry wanted justice for athletes, which makes him extremely relatable,” the narration says.

It’s hard to imagine a line like that making it into an authentic documentary.

The fake documentary also has some editing errors that stick out as particularly odd, like when the AI Cruise inexplicably repeats a line, the audio briefly cutting out for no discernible reason. The entire film is available on the messaging app Telegram, where Gizmodo watched it.

The fake documentary first surfaced in June 2023, according to Microsoft, but got renewed attention just before the start of the Olympics. As Microsoft pointed out in a report, the Storm-1679 group tried to instill fear in people about attending the 2024 Olympics in France. Fake videos purporting to show warnings from the CIA also claimed the games were at risk of a major terrorist attack. Other videos made to look like they’re from reputable news outlets, like France24, “claimed that 24% of tickets for the games had been returned due to fears of terrorism.” That’s simply not true.

The disinformation agents also tried to stoke fear around the war in Gaza, claiming there could be terrorism in France tied to the conflict.

“Storm-1679 has also sought to use the Israel-Hamas conflict to fabricate threats to the Games,” Microsoft wrote in the new report. “In November 2023, it posted images claiming to show graffiti in Paris threatening violence against Israeli citizens attending the Games. Microsoft assesses this graffiti was digitally generated and unlikely to exist at a physical location.”

That said, some of the information in the pseudo-documentary is actually true. For example, the film discusses the history of Wu Ching-kuo, the head of amateur boxing’s governing body AIBA, who was suspended over financial mismanagement claims. Other claims about Olympic officials are also true, according to the news sources available online. But that’s to be expected. The most successful propaganda mixes truth and fiction to make people unsure about what the truth might be.

All we know for certain is that Tom Cruise never narrated this movie. And if someone is trying to hijack the credibility of a major movie star to spread their message, you should always be skeptical of whatever they have to say, especially as bots help spread that media widely across social media platforms.

“While video has traditionally been a powerful tool for Russian IO campaigns and will remain so, we’re likely to see a tactical shift towards online bots and automated social media accounts,” Microsoft wrote. “These can offer the illusion of widespread support by quickly flooding social media channels and give the Russians a level of plausible deniability.”

10) Jimmy Lives

Tweets claiming that former president Jimmy Carter died went viral back in July, with many of the dumbest people on social media helping spread the hoax, from Laura Loomer to Mario Nawfal. But there was one person who shared the fake letter announcing Carter’s death who really should know better: Senator Mike Lee of Utah.

Bryan Metzger from Business Insider captured a screenshot of Lee’s tweet before he deleted it. And while Lee was surprisingly respectful in his condolences for Carter’s family (considering he’s a Trump supporter), the actual text of the letter should have tipped him off.

Why? Once you get to the fourth paragraph, things get a bit weird, making it clear this isn’t something that should be taken seriously.

Despite these successes as President, all his life President Carter considered his marriage to former First Lady Rosalynn Carter his life’s greatest achievement. At her passing last November President Carter said, “Rosalynn was a baddie. Jill, Melania, even throat goat Nancy Reagan had nothing on Rosalynn. She was the original Brat. She gave me wise guidance and encouragement when I needed it. As long as Rosalynn was in the world, I always knew somebody loved and supported me.” They were married for 77 years.

If you’re unfamiliar with the Nancy Reagan “throat goat” meme, we’ll let you google it on your own time and away from your work computer. It’s also highly unlikely that Carter’s death announcement would make reference to Charli XCX’s “Brat.”

Lee’s office in Washington D.C. confirmed over the phone that he deleted the tweet after finding out it wasn’t true but had no comment on where the senator first found the hoax letter.

Sen. Mike Lee (R-UT) appears to have been duped by a fake letter announcing Jimmy Carter’s death.

The fake letter quoted the former president as calling his late wife a “baddie” and the “original Brat” who “throat goat Nancy Reagan had nothing on.” pic.twitter.com/Z0GYDCXwNy

— bryan metzger (@metzgov) July 23, 2024

The fake letter can be traced back to an X account called @Bocc_accio, which included the caption “BREAKING” with “Former President Jimmy Carter has passed away. He was 99 years old.” But anyone who actually read the letter should have been able to figure it out. If you read the alt-text on the tweet, the image description even explains: “President Carter is still alive and in hospice care. This was an experiment to see how gullible people are to sensationalist headlines.”

🚨BREAKING🚨 — Former President Jimmy Carter has passed away. He was 99 years old. pic.twitter.com/nHuaf17uPG

— Boccaccio ✺ (@Bocc_accio) July 23, 2024

Oddly, it appears some of the fake Carter letters were photoshopped to exclude the stuff about Nancy Reagan. Loomer, a far-right grifter who will fall for almost any hoax and helps spread many of her own, actually shared a cleaner version of the letter. But she’s also been spreading numerous other dumb hoaxes in 2024, like claiming that President Joe Biden is really on his deathbed and has entered hospice care. Some right-wing accounts were recently going so far as to insist they’d delete their accounts if they were wrong about Biden dying imminently. It seems obvious that they’ll just wait until Biden eventually dies and claim they were right all along.

11) Hurricane Hits Disney

Walt Disney World in Orlando, Florida, shut down operations one night in October due to Hurricane Milton and the tornadoes that sprang up before the storm even made landfall. But photos of the theme park flooded with water started to pop up, despite being completely fake. Russian state media, TikTok, and X all helped them spread.

Russian state media outlet RIA made a post on Telegram with three images that appeared to show Disney World submerged. “Social media users post photos of Disneyland in Florida flooding as a result of Hurricane Milton,” the RIA account said, according to an English language translation.

How do we know these images are fake? For starters, the buildings aren’t right at all. If you compare, for instance, what it looks like on either side of Cinderella’s Castle at the Magic Kingdom, you don’t see those buildings that appear in the fake image.

But you don’t even need to know what the real Cinderella’s Castle looks like to know it’s fake. Just zoom in on the turrets of the building itself. They’re not rendered completely, and some appear at very odd angles that make it clear these are AI-generated images.

The images quickly made their way back to X, the site formerly known as Twitter before it was bought by Elon Musk. And it’s impossible to overstate just how terrible that platform has become. From Holocaust denial tweets that are getting millions of views to breaking news tweets that show AI images of children crying, the whole place is bubbling over with garbage.

The fake images of Disney World were shared by right-wing influencer Mario Nawfal, a frequent purveyor of misinformation who’s often retweeted by Musk himself. As just one recent example, Nawfal contributed to a Jimmy Carter death hoax in July that was shared by other idiots like Laura Loomer and Republican Sen. Mike Lee of Utah. Nawfal’s tweet helped spread the fake Disney photos even further on X before he finally deleted them.

There were also videos shared on TikTok showing highly over-the-top images of destruction at Disney World, like this one shared by user @joysparkleshine. The video appears to have been originally created as a joke by an account called MouseTrapNews but is getting reposted and stripped of all context, taken seriously by a certain segment of the population.

Some comments on the video include “I’m screaming the one place that made me feel like a kid again” and “Good… maybe they will now show all the underground tunnels under Disney next. Those who know… KNOW!!”

That last comment is a reference to the QAnon conspiracy theory, which asserts that children are being trafficked by powerful political figures and people like Oprah Winfrey and Tom Hanks. Incredibly, they believe that Donald Trump is going to save these kids. Yes, that Donald Trump.

Some AI images of the hurricane that were created as a joke even started showing up in various Russian news outlets, like the one below showing Pluto in a life jacket carrying a child through floodwaters. The image appears to have been earnestly shared by Rubryka.com, which credits the image to Bretral Florida Tourism Oversight District, an X account devoted to jokes about theme parks.

Another joke image shared by that account, Bretral Florida Tourism Oversight District, showed a photo of a boat stuck on a large mountain rock, which anyone who knows Disney World will recognize as a permanent fixture at Disney’s Typhoon Lagoon water park.

The sun is starting to rise in Florida.

Jesus Christ. #hurricanemilton pic.twitter.com/EQwQOEgqnU

— Scott Walker (@scottwalker88) October 10, 2024

Other accounts on X were sharing the QAnon theories, insisting that the destruction of Disney World might finally reveal the truth about child trafficking.

“Look at Mickey’s hands,” one particularly bizarre tweet with an image of a Mickey Mouse clock reads. “Could they be showing a date? October 9th. The day the storm hit Disney World. There are no coincidences. The Military are clearing out the tunnels beneath. Used for human trafficking of children and other horrible crimes.”

Look at Mickey’s hands. Could they be showing a date? October 9th. The day the storm hit Disney World.

There are no coincidences.

The Military are clearing out the tunnels beneath. Used for human trafficking of children and other horrible crimes.

«Hurricane Milton hits Walt… pic.twitter.com/Z7rZH2uVbb

— Bendleruschka (@bendleruschka) October 10, 2024

Milton made landfall as a Category 3 hurricane, which caused hundreds of canceled flights and battered homes and businesses with punishing winds, according to NBC News. Disney posted an update on its website explaining that everything would be opening back up a day later.

“We’re grateful Walt Disney World Resort weathered the storm, and we are currently assessing the impacts to our property to prepare for reopening the theme parks, Disney Springs, and possibly other areas on Friday, October 11. Our hearts are with our fellow Floridians who were impacted by this storm,” the website reads.

It’s a ridiculous environment for disinformation right now. And that will likely continue to be our reality for the foreseeable future.

12) Fake Claims About Tim Walz

The 2024 presidential campaign was awash with fake stories about Vice President Kamala Harris and her running mate Tim Walz, all spread by supporters of Donald Trump. But nowhere were these stories more abundant than X, the social media platform formerly known as Twitter, which was bought in 2022 by far-right billionaire Elon Musk.

What do we mean by ridiculous lies? Take a story that went viral on X in 2024, purporting to be a testimonial from a former student of Tim Walz. The person in the video, falsely identified as a man named Matthew Metro, claimed they were sexually abused by Walz. But anyone actually from Minnesota would immediately notice some big red flags. For starters, the person claimed they were a student at Mankato West, the high school where Walz worked in the 1990s and early 2000s. But the person mispronounces the word Mankato and somehow even the word Minnesota sounds weird.

🚨BREAKING NEWS JUST ANNOUNCED:

Tim Walz’s former student, Matthew Metro, posted a video online claiming he was sexually assaulted by Walz back in 1997. If this is true, she’s done. The Kamala Harris/ Tim Walz combo is done. It's over.#KamalaDumpsterFire pic.twitter.com/n6cgr70VrG

— MatthewLovesUSA (@MatthewLovesUSA) October 17, 2024

Why was this going viral? Because it was being promoted by X’s algorithm in the For You feed, as we personally experienced on October 6. But the Washington Post spoke to the real Matthew Metro, who now lives in Hawaii and whose name was apparently found by the disinformation agents through a publicly posted yearbook.

“It’s obviously not me: The teeth are different, the hair is different, the eyes are different, the nose is different,” the 45-year-old Metro told the Washington Post. “I don’t know where they’re getting this from.”

Wired talked with analysts who believe the video is the work of a Russian-aligned disinformation operation called Storm-1516, which also spread the false claim that Harris participated in a hit-and-run in San Francisco in 2011. But Wired also believes the video was created with AI, something that not all experts agree on. All we know for certain is that the video is clearly fake and was being spread inorganically on X.

13) Hollywood Mountain?

Did you see the stunning photo of Hollywood Mountain, California, that went viral in 2024? One image that made the rounds on social media platforms like Facebook and Bluesky depicted what looks like a lush green mountain with the L.A. skyline in the background. But it’s completely fake. There’s not even a real place called Hollywood Mountain.

The image appears to have originally been posted to Facebook by someone named Mimi Ehis Ojo, who has plenty of other AI-generated images on their page. And while anyone who lives in Southern California can probably tell immediately that it’s fake, it looks like some people who’ve never visited the U.S. are getting tricked into thinking it’s real.

“How many mountains does America have so all the physical land features are complete in America then we are cheated by God,” one Facebook user from Nigeria commented.

Writer Cooper Lund pointed out on Bluesky that this kind of confusion could be a recipe for disaster if foreign tourists show up to the U.S. expecting to see some of these fantastical scenes that were generated by AI.

“Sometime soon we’re going to get a story about tourists having weird meltdowns because the only things they knew about the places they’re visiting are bad AI generations,” Lund tweeted.

Lund pointed out that something very similar happened in the early 2010s when Chinese tourists visited Paris, France. They expected Paris to be “like a pristine film set for a romantic love story,” as the New York Times described it in 2014. Instead, they were shocked by “the cigarette butts and dog manure, the rude insouciance of the locals and the gratuitous public displays of affection.”

Experts even have a name for when this happens, according to the Times:

Psychologists warned that Chinese tourists shaken by thieves and dashed expectations were at risk for Paris Syndrome, a condition in which foreigners suffer depression, anxiety, feelings of persecution and even hallucinations when their rosy images of Champagne, majestic architecture and Monet are upended by the stresses of a city whose natives are also known for being among the unhappiest people on the planet.

Given America’s enormous cultural footprint, plenty of people probably have a very different idea of the U.S. in their head compared to what they’d encounter upon visiting. And while you can blame a lot of that on Hollywood, there seems to be a new cultural catfisher in town.

Generative AI can turn any imaginary place into a passable reality with just a few simple text prompts. And if you visit the real city of Hollywood as an outsider, prepare to temper your expectations. It doesn’t look anything like the completely fictitious Hollywood Mountain.

14) MyPillow Zombie

Big-time Trump backer and MyPillow CEO Mike Lindell had a number of fakes made about him in 2024, including this one where he looked spaced out, with dark circles under his eyes. But it’s not real. The photo might look oddly realistic, but it’s been altered significantly.

“Meeting Mike Lindell at the Waukesha Trump Rally must’ve been what it was like to shake hands with the Apostle Paul before Christ took the stage at the sermon on the mound,” an X account credited to someone named Gary Peterson tweeted.

Meeting Mike Lindell at the Waukesha Trump Rally must’ve been what it was like to shake hands with the Apostle Paul before Christ took the stage at the sermon on the mound. pic.twitter.com/DMQ0iR2ni7

— Gary Peterson 🇺🇸 (@GaryPetersonUSA) May 1, 2024

Gary Peterson, operating under the handle @GaryPetersonUSA, appears to have been the first to post the photoshopped image. But it’s an account that frequently publishes altered images, often in a way that makes them difficult to distinguish from authentic photos.

The tweet alone racked up over 6 million views, spreading far and wide and jumping to various social media services like Facebook and Bluesky, as these things often do when they go viral.

Where is the image actually from? It appears the image was originally posted to X by Waukesha, Wisconsin’s The Devil’s Advocates Radio on May 1 and shows Lindell outside one of Donald Trump’s neo-fascist rallies.

You never know who you’re gonna meet at a #Trump rally. Tune in today @devilradio 3-6 CT for #crute’s exclusive with My Pillow Guy, former crackhead, Mike Lindell. @WAUKradio @MadRadio927 pic.twitter.com/BinfQ3YMGE

— The Devil’s Advocates Radio (@devilradio) May 1, 2024

As you can see, the image doesn’t include Lindell with mussed-up hair and heavy, dark circles under his eyes. Lindell also has a more normal smile versus the bizarre gaze from the altered image.

Eagle-eyed viewers may have also noticed something else odd about the photoshopped image. If you zoom in over Lindell’s left shoulder you can spot what appears to be a roaring bear. It’s not clear why the people who made this image included the bear, but it’s not in the original image.

Most major media outlets were laser-focused on generative AI and the ways that might influence people and swing voters in the election. But this fake featuring Lindell was a good reminder that old-fashioned photoshopping is still around. You don’t need fancy AI to make a convincing (if admittedly perplexing) fake image.

15) Luigi’s Fake Clock

Did you see a mysterious video featuring a countdown clock that purported to be from Luigi Mangione, the 26-year-old charged in the killing of UnitedHealthcare CEO Brian Thompson in New York? The video went viral on YouTube, getting attention on sites like Hacker News. But it’s completely fake.

Mangione reportedly possessed a “manifesto” as well as a ghost gun and was arrested at a McDonald’s in Altoona, Pennsylvania. Not long after that, a video popped up that appeared to be from a YouTube account associated with Mangione. It opened with the words “The Truth” and “If you see this, I’m already under arrest.” It featured a countdown clock that first counted from 5 to 1 before flipping to 60 and counting down all the way to zero from there.

The lower right corner included the word “Soon” and briefly flashed the date Dec. 11 before disappearing again in less than a second. It ended with the words “All is scheduled, be patient. Bye for now.”

If you’re curious what the video actually looked like, you can check it out here. YouTube confirmed to Gizmodo that it wasn’t real.

“We terminated the channel in question for violating our policies covering impersonation, which prohibit content intended to impersonate another person on YouTube,” a spokesperson for the video platform told Gizmodo over email.

“The channel’s metadata was updated following widespread reporting of Luigi Mangione’s arrest, including updates made to the channel name and handle,” the spokesperson continued. “Additionally, we terminated 3 other channels owned by the suspect, per our Creator Responsibility Guidelines.”

The spokesperson also noted that these accounts had been dormant for months. Who is actually behind the video? That remains unknown. But the viral memes about Mangione aren’t going to stop anytime soon now that he’s been paraded in front of the cameras during his extradition to New York.

“Okay, get some snaps of that Mangione character, but you better not pull any bullshit where he looks like the subject in a 16th century painting called ‘Christ taken at the Garden of Gethsemane’.”

“Okay, now don’t be mad but…” [image or embed]

— Jessica Ritchey (@jmritchey.bsky.social) December 19, 2024 at 1:25 PM

Who at the NYPD thought this was a good idea?