Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Google I/O is the company’s biggest event of the year. Even the launch of the Pixel 10 series later in 2025 won’t compare in size and scope to I/O. This week, I was lucky enough to attend I/O on the steps of Google’s Mountain View headquarters, and it was a total blast.

Unfortunately, people who watch the keynote livestream at home don’t get to experience what happens after Sundar Pichai exits the stage. That’s when I/O gets incredibly fun, because you can wander around the grounds and actually try out many of the upcoming tech and services Googlers just gushed about during the main event.

Here, I want to tell you about the five coolest things I was lucky enough to try! It’s not the same as being there, but it will have to do for now.

Lanh Nguyen / Android Authority

At Google I/O 2024, we first saw hints of a new set of AR glasses. Based around something called Project Astra, the glasses seemed like the modern evolution of Google Glass, the doomed smart glasses the company tried to launch over 10 years ago. All we saw during the 2024 demo, though, was a pre-recorded first-person-view clip of a prototype of these glasses doing cool things around an office, such as identifying objects, remembering where an object once was, and extrapolating complex ideas from simple drawings. Later that year, we found out that these glasses run on something called Android XR, and we actually got to see the wearable prototype in promotional videos supplied by Google.

These glasses were hinted at during I/O 2024, but now I got to see them on stage and even wear them myself.

This week, Google not only showed off the glasses in real-world on-stage demos, but even gave I/O attendees the chance to try them on. I was able to demo them, and I gotta say: I’m impressed.

First, nearly everything Google showed on stage this year worked for me during my demo. I was able to look at objects and converse with Gemini about them, both seeing its responses in text form on the tiny display and hearing the responses blasted into my ears from the speakers in the glasses’ stems. I was also able to see turn-by-turn navigation instructions and smartphone notifications. I could even take photos with a quick tap on the right stem (although I’m sure they aren’t great, considering how tiny the camera sensor is).

Nearly everything Google showed during the keynote worked during my hands-on demo. That’s a rarity!

Although the glasses support a live translation feature, I didn’t get to try that out. This is likely due to translation not working quite as expected during the keynote. But hey, they’re prototypes, and that’s just how it goes sometimes.

The only disappointing thing was that the imagery only appeared in my right eye. If I closed my right eye, the imagery vanished, meaning these glasses don’t project onto both eyes simultaneously. A Google rep explained to me that this is by design. Android XR can support devices with two screens, one screen, or even no screens. It just so happened that these prototype glasses were single-screen units. In other words, models that actually hit retail might have dual-screen support or might not, so keep that in mind for the future.

Unfortunately, the prototype glasses only have one display, so if you close your right eye, all projections vanish.

Regardless, the glasses worked well, felt good on my face, and have the pedigree of Google, Samsung, and Qualcomm behind them (Google developing software, Samsung developing hardware, and Qualcomm providing silicon). Honestly, it was so exciting to use them and immediately see that these are not Glass 2.0 but a fully realized wearable product.

Hopefully, we’ll learn more about when these glasses (or those offered by XREAL, Warby Parker, and Gentle Monster) will actually launch, how much they’ll cost, and in what areas they’ll be available. I just hope we won’t need to wait until Google I/O 2026 for that information.

The prototype AR glasses weren’t the only Android XR wearables available at Google I/O. Although we’ve known about Project Moohan for a while now, very few people have actually been able to test out Samsung’s premium VR headset. Now, I’m in that small group of folks.

The first thing I noticed about Project Moohan when I put it on my head was how premium and polished the headset is. This isn’t some first-run demo with a lot of rough edges to smooth out. If I didn’t know better, I would have assumed this headset was retail-ready. It’s that good.

Project Moohan already feels complete. If it hit store shelves tomorrow, I would buy it.

The headset fit well and had a good weight balance, so the front didn’t feel heavier than the back. The battery pack not being in the headset itself had a big part in this, but having a cable running down my torso and a battery pack in my pocket was less annoying than I thought it would be. What was most important was that I felt I could wear this headset for hours and still be comfortable, which you can’t say about every headset out there.

Once I had Project Moohan on, it was a stunning experience. The displays inside automatically adjusted themselves for my pupillary distance, which was very convenient. And the visual fidelity was incredible: I had a full-color, low-latency view of the real world, with none of the blurriness I’ve experienced with other VR systems.

The display fidelity of Project Moohan was some of the best I’ve ever experienced with similar headsets.

It was also exceptionally easy to control the headset using my hands. With Project Moohan, no controllers are needed. You can control everything using palm gestures, finger pinches, and hand swipes. It was super intuitive, and I found myself comfortable with the operating system in mere minutes.

Of course, Gemini is the star here. There’s a button on the top right of Project Moohan that launches Gemini from anywhere within Android XR. Once launched, you can give commands, ask questions, or just have a relaxed conversation. Gemini understands what’s on your screen, too, so you can chat with it about whatever it is you’re doing. A Google rep told me how they use this for gaming: if they come across a difficult part of a game, they ask Gemini for help, and it will pull up tutorials or guides without them ever needing to leave the game.

Speaking of gaming, Project Moohan supports all manner of controllers. You’ll be able to use traditional VR controllers or even something simpler like an Xbox controller. I wasn’t able to try this out during my short demo, but it made me very excited about this becoming a true gaming powerhouse.

I didn’t get to try it, but Google assured me that Project Moohan will support most gaming controllers, making me very excited about this becoming my new way to game.

The fatal flaw here, though, is the same one we have with the Android XR glasses: we don’t know when Project Moohan is actually coming out. We don’t even know its true commercial name! Google and Samsung say it’s coming this year, but I’m skeptical considering how long it’s been since we first saw the project announced and how little headway we’ve made since (the US’ tariff situation doesn’t help, either). Still, whenever it does land, I will be first in line to get one.

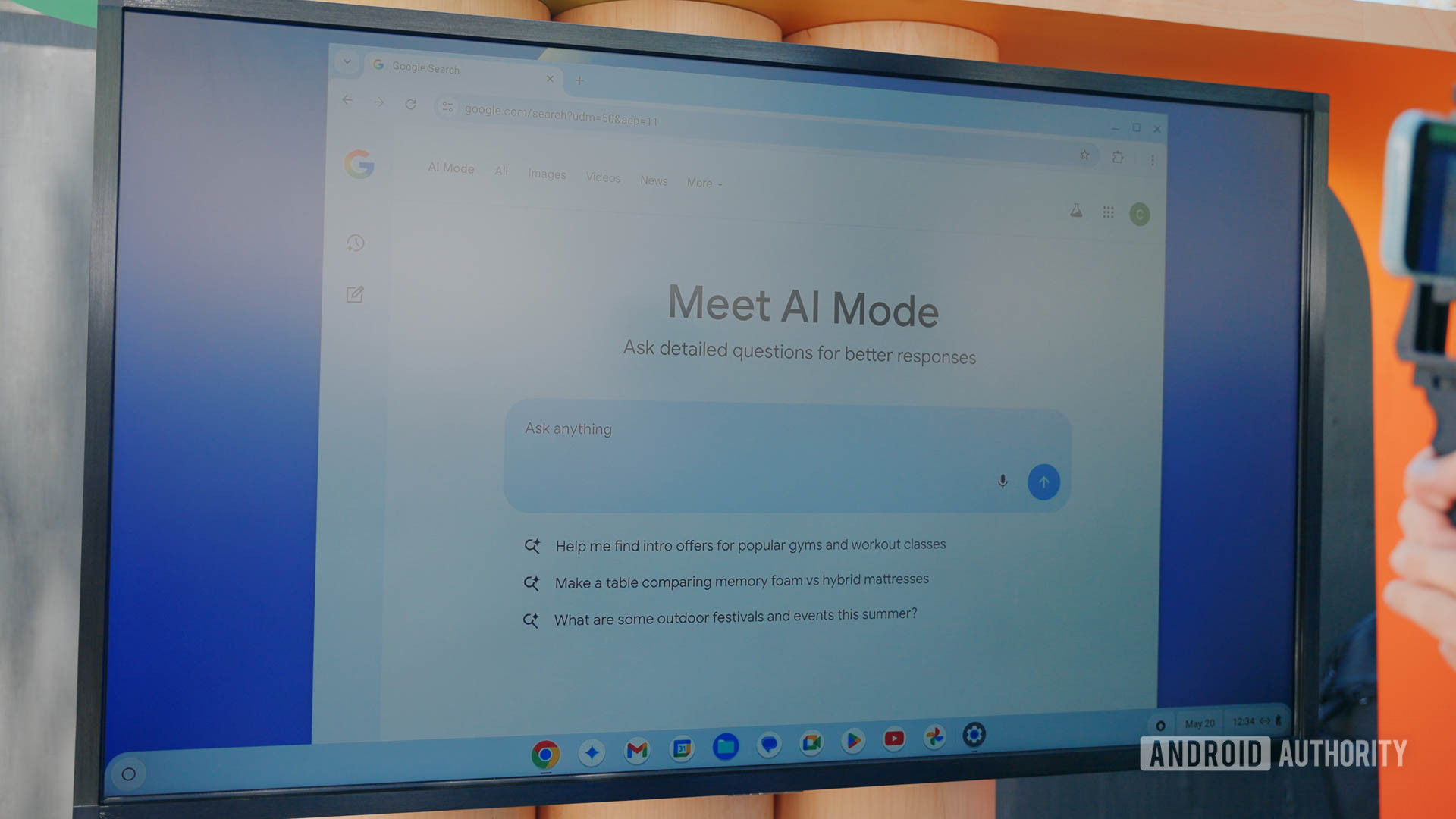

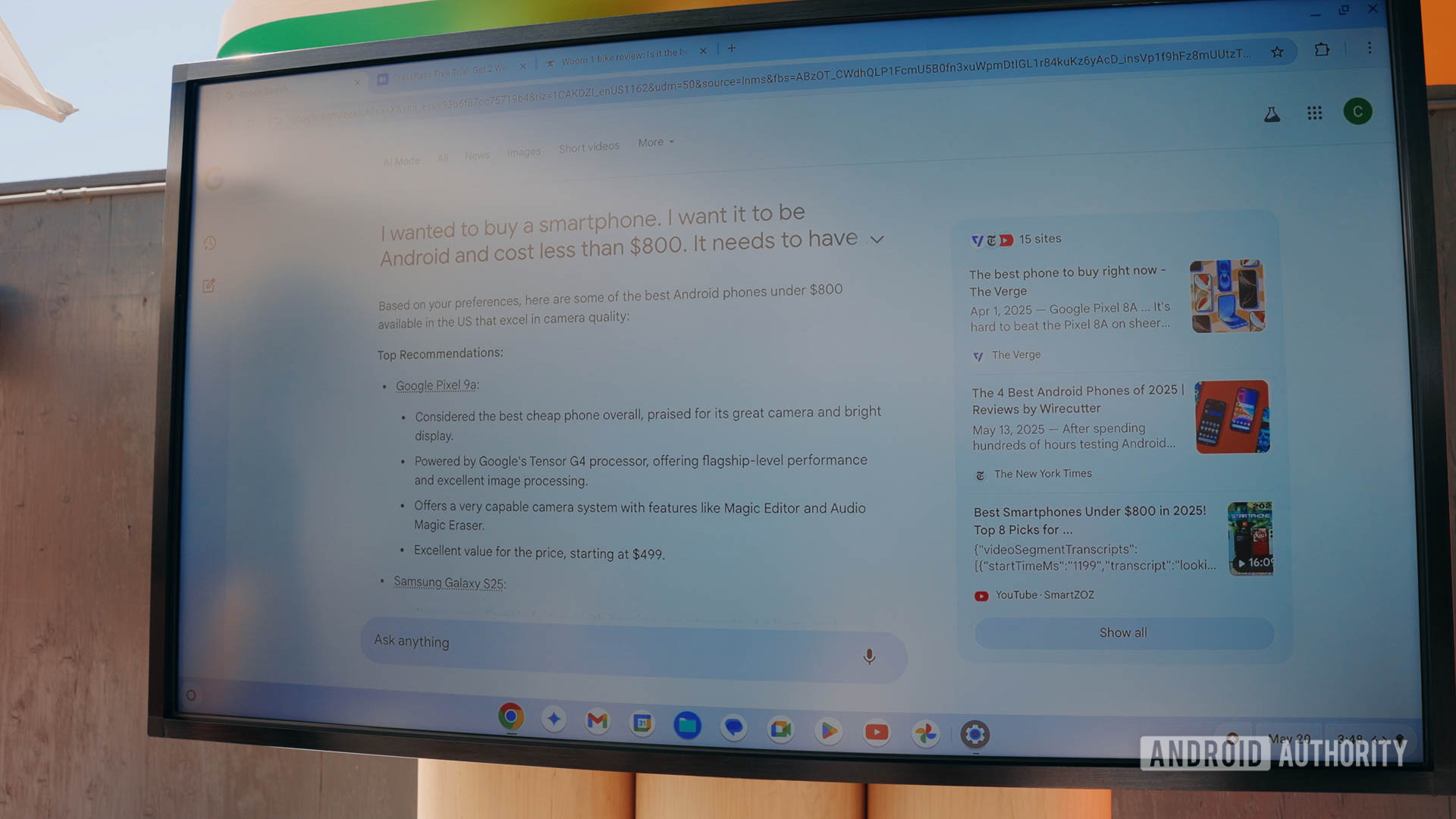

Moving away from hardware, AI Mode was another star of Google I/O 2025. Think of this as Google Search on AI steroids. Instead of typing in one query and getting back a list of results surrounding it, you can give much more complex queries and get a unique page of results based on multiple searches, giving you an easy-to-understand overview of what you’re trying to investigate.

AI Mode allows you to do multiple Google searches at once with prompts as long as you need them to be.

For example, I used it to hunt for a new smartphone. I typed in a pretty long query about how the phone needed to run Android (obviously), cost less than $800, have a good camera, and be available in the United States. Normally, a simple Google search wouldn’t work for this, but AI Mode got right down to it. It returned a page filled with good suggestions for phones, including the Pixel 9a, the Galaxy S25, and the OnePlus 13R, all incredibly solid choices. It even included buy links, YouTube reviews, articles, and more.

The cool thing about AI Mode is that you don’t need to wait to try it for yourself. If you live in the US and have Search Labs enabled, you should already have access to AI Mode at Google.com (if you don’t, you’ll have it soon).

One thing you can’t try out in AI Mode yet, though, is Search Live. This was announced during the keynote and is coming later this summer. Essentially, Search Live allows you to share with Gemini what’s going on in the real world around you through your smartphone camera. If this sounds familiar, that’s because Gemini Live on Android already supports this. With Search Live, though, it will work on any mobile device through the Google app, allowing iPhone users to get in on the fun, too.

With AI Mode you’ll also eventually find Search Live, which allows you to show Gemini the real world using your phone’s camera.

I tried out an early version of Search Live, and it worked just as well as Gemini Live. It will be great for this to be available to everyone everywhere, as it is a very useful tool. However, Google is in dangerous “feature creep” territory now, so hopefully it doesn’t let things get too confusing for consumers about where they need to go to get this service.

Of everything I saw at Google I/O this year, Flow was the one that left me the most conflicted. Obviously, I think it’s super cool (otherwise it wouldn’t be on this list), but I also think it’s kind of frightening.

Flow is a new filmmaking tool that allows you to easily generate video clips in succession and then edit those clips on a traditional timeline. For example, this could allow you to create an entire film scene by scene using nothing but text prompts. When you generate a clip, you can tweak it with additional prompts or even extend it to get more out of your original creation.

Flow could be a great new filmmaking tool, or the death of film as we know it.

What’s more, Flow will also incorporate Veo 3, Google’s latest iteration of its video generation system. Veo 3 allows you to create moving images along with music, sound effects, and even spoken dialogue. This makes Flow a tool that could allow you to create a full film out of thin air.

Using Flow during my demo was so easy. I created some clips of a bugdroid having spaghetti with a cat, and it came out hilarious and cute. I was able to edit the clip, add more clips to it, and extend clips with a few mouse clicks and some more text prompts.

Flow was easy to use and understand, and it worked well enough, but I couldn’t help but wonder why it needs to exist at all.

I didn’t get to try out Veo 3 during my demo, unfortunately. This wasn’t because of a limitation of the system but of time: it takes up to 10 minutes for Veo 3 to create clips. Even if I only made two clips, it would push my demo time beyond what’s reasonable for an event the size of Google I/O.

After I exited the Flow demo, I couldn’t help but think about Google’s The Wizard of Oz remake for Sphere in Las Vegas. Obviously, Flow isn’t going to have people recreating classic films using AI, but it does have the same problematic air to it. Flow left me feeling elated at how cool it is and dismayed by how unnecessary it is.

Everything I’ve talked about here so far is something you’ll actually be able to use someday. This last one, though, is not likely to be something you’ll have in your home any time soon. At Google I/O, I got to control two robot arms using only voice commands to Gemini.

The two arms towered over various objects on a table. By speaking into a microphone, I could tell Gemini to pick up an object, move objects, place objects into other objects, and so on, using only natural language to do it.

It’s not every day I get to tell robots what to do!

The robot arms weren’t perfect. If I had them pick up an object and put it into a container, they would do it, but then they wouldn’t stop. The arms would continue to pick up more objects and try to dump them into the container. Also, the arms couldn’t do multiple tasks in one command. For example, I couldn’t have them put an object into a container and then pick up the container and dump out the object. That’s two actions and would require two commands.

Still, this is the first time in my life that I’ve been able to control robots using nothing but my voice. That’s basically the very definition of “cool.”

Those were the coolest things I tried at I/O this year. Which one was your favorite? Let me know in the comments!